Chat with a data expert: 888-378-1920

Improving Data Accuracy

Streamlining Pharmacy Data Management through Snowflake Optimization

EXECUTIVE SUMMARY

This case study explores the challenges and solutions involved in overhauling the data management system for a major pharmacy client.

Plagued by inefficient processes and data inaccuracies resulting from the use of ephemeral Spark clusters and Redshift, the client faced excessive operational costs and prolonged ETL (Extract, Transform, Load) execution times, often exceeding 24 hours.

By transitioning to a managed Snowflake environment with dbt for the transformation

and Airflow orchestration, the client achieved a significant reduction in costs and improved data integrity

BACKGROUND

The client is a leading entity in the pharmaceutical sector, relying heavily on data analytics to inform decision-making.

Their existing data management setup included ephemeral Spark clusters used in conjunction with Redshift for data processing.

However, the architecture presented major drawbacks:

Inefficiency:

With hundreds of scripts running on Spark, it was a Herculean task to troubleshoot issues.

Extended ETL Processes:

ETL operations routinely exceeded 24 hours, hindering timely data availability

Data Inaccuracies:

The model, which consisted of flat tables within a data lake, was susceptible to inaccuracies and duplications.

PROBLEM STATEMENT

The inefficient data management system resulted in inflated costs due to unoptimized resource usage.

Moreover, the data inaccuracies compromised the quality of analytics, leading to potential errors in strategic decisions.

The client’s reliance on ephemeral Spark clusters coupled with Redshift for data processing was fraught

with challenges. These clusters, by design, lose their state and any temporary objects upon shutdown.

This behavior resulted in additional complexity:

State and Data Loss:

Each termination of a cluster led to the loss of state and temporary data objects, necessitating a complete rebuild in subsequent runs, which was both time-consuming and resource-intensive.

Troubleshooting Difficulty:

The transient nature of these clusters compounded the already arduous task of troubleshooting hundreds of scripts, as the ephemeral environment lacked persistence for in-depth analysis post-execution.

OBJECTIVES

The primary goal was to reduce operational costs while enhancing data accuracy and processing times.

The specific objectives were:

To decrease ETL run times significantly from over 24 hours.

To eliminate data inaccuracies and duplications.

To streamline the troubleshooting process for data scripts.

To reduce overall data management costs by a substantial margin.

METHODOLOGY

The approach to achieving these objectives was twofold:

Transition to Airflow/dbt and Snowflake: Migrate from ephemeral Spark clusters to a managed Snowflake environment, orchestrated by Apache Airflow with dbt.

Data Model Restructuring:

Redesign the data model to eradicate flat table structures in favor of a more robust, accurate schema.

IMPLEMENTATION

The migration involved several key stages:

Assessment:

An initial evaluation revealed underutilized Spark clusters, accruing unnecessary costs.

Strategy Development:

A plan was devised to transition hundreds of scripts to dbt and orchestrated by Airflow workflows.

Execution:

Careful migration was undertaken to ensure minimal disruption to ongoing operations.

RESULTS

Post-implementation, the results were transformative:

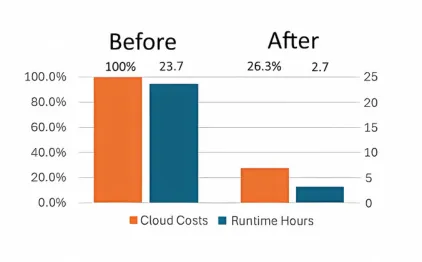

Cost Reduction: The client observed over 75% savings in data management costs.

Efficiency Gains: ETL run times were substantially reduced.

Accuracy Improvement: The new data model eliminated inaccuracies and duplications.

Resource Optimization: Idle Spark clusters were identified and decommissioned, leading to a direct saving of $7,000 per month

CONCLUSION

The strategic shift to a managed Snowflake and a dbt/Airflow environment not only achieved significant cost reductions but also paved the way for more reliable data analytics. This case study demonstrates the potential for large-scale data operations to become more cost-effective, accurate, and efficient through thoughtful architectural changes

© 2026 Macer Consulting • All Rights Reserved.